Tanushree Banerjee

I'm Tanushree (she/her), a PhD student in Computer Science at the University of Maryland, College Park, advised by Prof. Matthias Zwicker.

Previously, I graduated from Princeton with a BSE in Computer Science. I conducted my senior thesis under Prof. Felix Heide in the Princeton Computational Imaging Lab on explainable 3D perception via inverse generation, for which I received an Outstanding Computer Science Senior Thesis Prize.

Earlier during my undergraduate studies, I worked under Prof. Karthik Narasimhan in the Princeton Natural Language Processing Group and Prof. Olga Russakovsky in the Princeton Visual AI Lab.

Research

My research lies at the intersection of computer vision, computer graphics, machine learning, and optimization, focusing on leveraging generative priors for explainable 3D perception.

Some research questions that inspire my current work:

- Can we leverage priors learned in generative models to interpret 3D/4D information from everyday 2D videos and photographs?

- How can we reformulate visual perception as an inverse generation problem?

* indicates equal contribution. Representative projects are highlighted.

Towards Generalizable and Interpretable Three-Dimensional Tracking with Inverse Neural Rendering

We extend our previous arXiv preprint, which recasts 3D multi-object tracking from RGB cameras as an Inverse Rendering (IR) problem, to object classes other than vehicles, and we use several different generator models to showcase the generalization ability of our method.

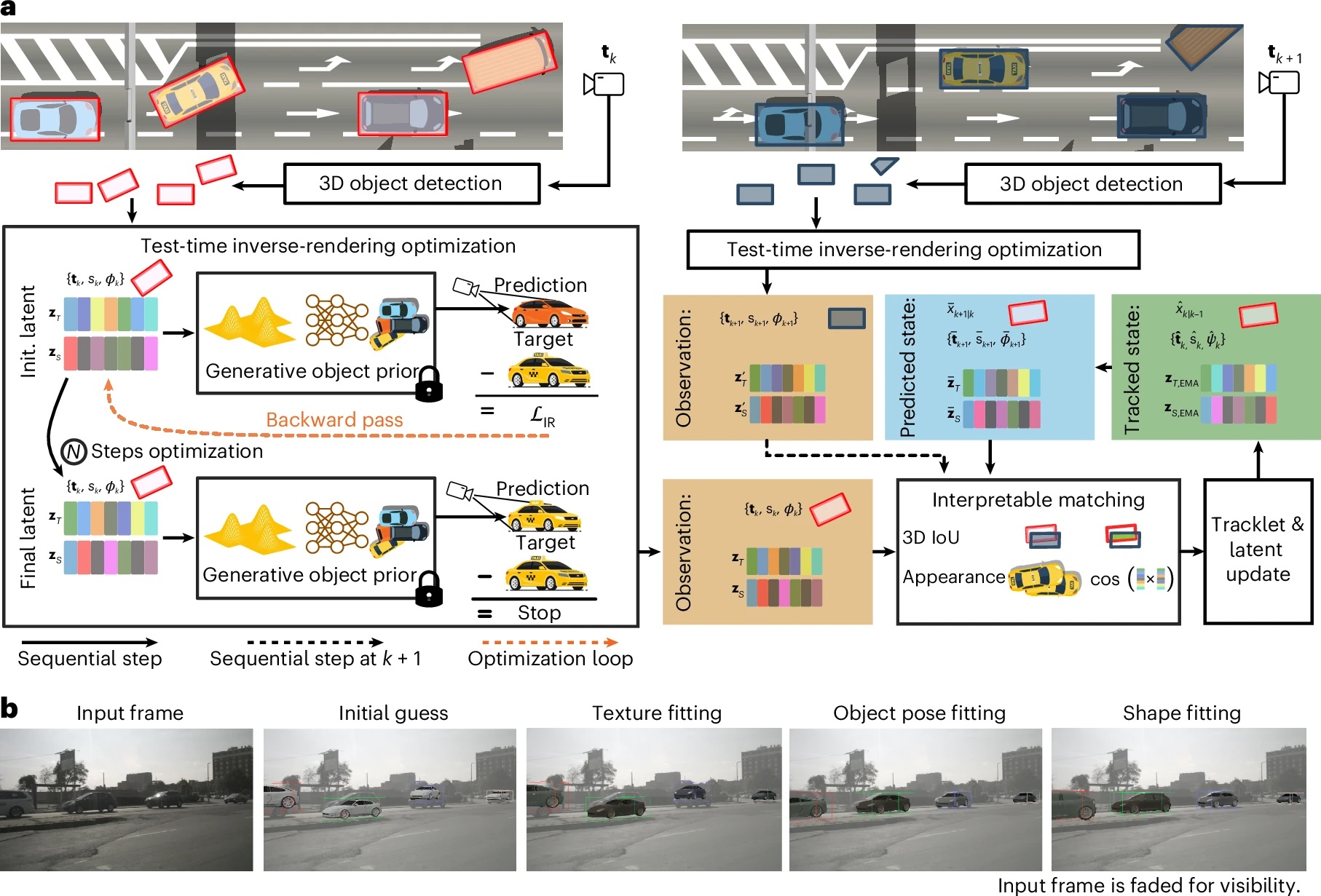

Inverse Neural Rendering for Explainable Multi-Object Tracking

We propose to recast 3D multi-object tracking from RGB cameras as an Inverse Rendering (IR) problem. Using a differentiable rendering pipeline, we optimize over the latent space of pre-trained 3D object representations and retrieve the latents that best represent object instances in a given input image. Our method is not only an alternate take on tracking; it also enables examining the generated objects, reasoning about failure situations, and resolving ambiguous cases.

LLMs are Superior Feedback Providers: Bootstrapping Reasoning for Lie Detection with Self-Generated Feedback

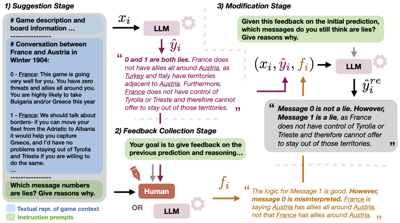

We investigated a bootstrapping framework that leverages self-generated feedback for detecting deception in Diplomacy games. We collected a novel dataset of human feedback on initial predictions and compared modification-stage performance when using human feedback versus LLM-generated feedback. Our approach based on LLM-generated feedback achieved superior performance, with a 39% improvement in lying-F1 over the zero-shot baseline, without requiring any training.

Teaching & Outreach

I have been incredibly fortunate to receive tremendous support during my studies from faculty mentors and peers to pursue a research career in computer science. I am keen to use my position to help empower others to pursue careers in computer science. This section includes my teaching and outreach endeavors to help address the acute diversity crisis in this field.

As a UCA, I held office hours for students in the independent work seminar for juniors and seniors on AI for Engineering and Physics taught by Prof. Ryan Adams, helping them debug their code and advising them on their semester-long independent work projects.

As an instructor, I developed workshops and Colab tutorials leading up to an NLP-based capstone project for an ML summer camp for high school students from underrepresented backgrounds, focusing on the potential for harm perpetuated by large language models. I also organized guest speaker talks to expose students to diverse applications of ML in non-traditional fields.